Publications

2026


UI Remix: Supporting UI Design Through Interactive Example Retrieval and Remixing
Junling Wang, Hongyi Lan, Xiaotian Su, and 2 more authors
In Proceedings of the 31st International Conference on Intelligent User Interfaces, 2026

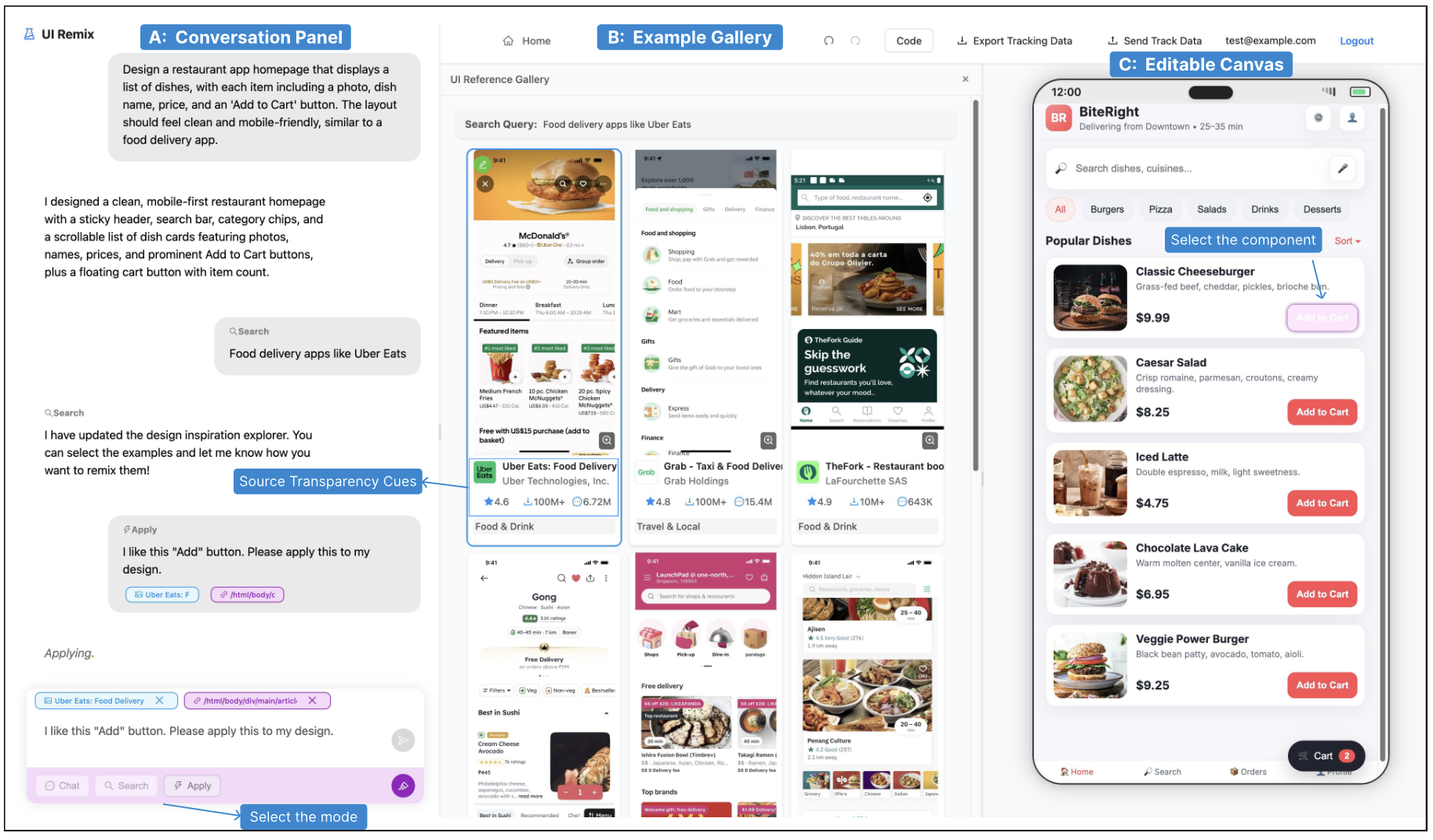

Designing user interfaces (UIs) is a critical step when launching products, building portfolios, or personalizing projects, yet end users without design expertise often struggle to articulate their intent and to trust design choices. Existing example-based tools either promote broad exploration, which can cause overwhelm and design drift, or require adapting a single example, risking design fixation. We present UI Remix, an interactive system that supports mobile UI design through example-driven exploration. Powered by a multimodal retrieval-augmented generation (MMRAG) model, UI Remix enables iterative search, selection, and adaptation of examples at both the global (whole interface) and local (component) level. To foster trust, it presents source transparency cues such as ratings, downloads, and developer information. In an empirical study with 24 end users, UI Remix significantly improved participants’ ability to achieve their design goals, facilitated effective iteration, and encouraged exploration of alternative designs. Participants also reported that source transparency cues enhanced their confidence in adapting examples. Our findings suggest new directions for AI-assisted, example-driven systems that empower end users to design with greater control, trust, and openness to exploration.

@inproceedings{Wang-etal-2026-UIRemix, author = {Wang, Junling and Lan, Hongyi and Su, Xiaotian and Dogan, Mustafa Doga and Wang, April Yi}, title = {UI Remix: Supporting UI Design Through Interactive Example Retrieval and Remixing}, year = {2026}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, booktitle = {Proceedings of the 31st International Conference on Intelligent User Interfaces}, series = {IUI '26}, }

2025


Emotionally Aware Moderation: The Potential of Emotion Monitoring in Shaping Healthier Social Media Conversations
Xiaotian Su, Naim Zierau, Soomin Kim, and 2 more authors
In Proceedings of the ACM on Human-Computer Interaction, Volume 9, 2025

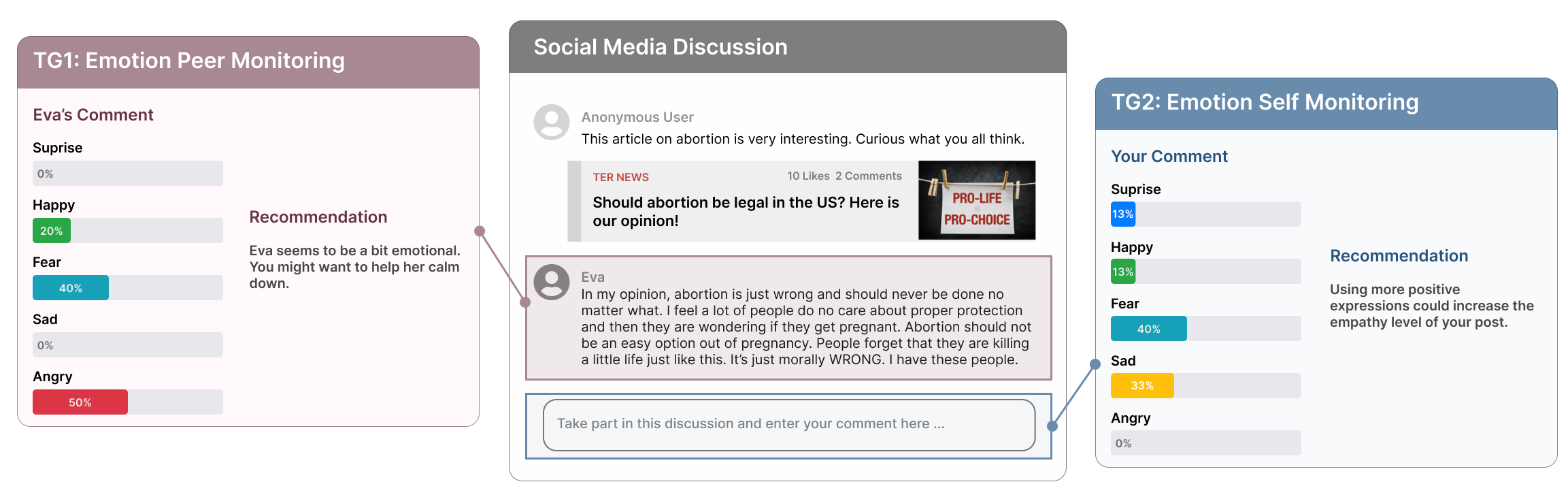

Social media platforms increasingly employ proactive moderation techniques, such as detecting and curbing toxic and uncivil comments, to prevent the spread of harmful content. Despite these efforts, such approaches are often criticized for creating a climate of censorship and failing to address the underlying causes of uncivil behavior. Our work makes both theoretical and practical contributions by proposing and evaluating two types of emotion monitoring dashboards to enhance users’ emotional awareness and mitigate hate speech. In a study involving 211 participants, we evaluate the effects of the two mechanisms on user commenting behavior and emotional experiences. The results reveal that these interventions effectively increase users’ awareness of their emotional states and reduce hate speech. However, our findings also indicate potential unintended effects, including increased expression of negative emotions (anger, fear, and sadness) when discussing sensitive issues. These insights provide a basis for further research on integrating proactive emotion regulation tools into social media platforms to foster healthier digital interactions.

@inproceedings{Su-etal-2025-Emotion, author = {Su, Xiaotian and Zierau, Naim and Kim, Soomin and Wang, April Yi and Wambsganss, Thiemo}, title = {Emotionally Aware Moderation: The Potential of Emotion Monitoring in Shaping Healthier Social Media Conversations}, year = {2025}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3757472}, doi = {10.1145/3757472}, booktitle = {Proceedings of the ACM on Human-Computer Interaction, Volume 9}, articleno = {291}, numpages = {28}, keywords = {social media, proactive intervention, emotion monitoring}, series = {CSCW '25}, paper = {CSCW25_emotion.pdf}, }

The Stress of Improvisation: Instructors’ Perspectives on Live Coding in Programming Classes
Xiaotian Su and April Yi Wang
In Proceedings of the Extended Abstracts of the CHI Conference on Human Factors in Computing Systems, 2025

Live coding is a pedagogical technique in which an instructor writes and executes code in front of students to impart skills like incremental development and debugging. Although live coding offers many benefits, instructors face a range of difficulties in the classroom, such as cognitive challenges and psychological stress, most of which have yet to be formally studied. To understand the obstacles instructors face in CS classes, we conducted (1) formative interviews with five teaching assistants in exercise sessions and (2) a contextual inquiry study with four lecturers of large-scale classes. We found that the improvisational and unpredictable nature of live coding makes it difficult for instructors to manage their time and keep students engaged, resulting in more mental stress than presenting static slides. We discuss opportunities for augmenting existing IDEs and presentation setups to enhance the live coding experience.

@inproceedings{Su-etal-2025-Stress, author = {Su, Xiaotian and Wang, April Yi}, title = {The Stress of Improvisation: Instructors' Perspectives on Live Coding in Programming Classes}, year = {2025}, isbn = {9798400713958}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3706599.3719993}, doi = {10.1145/3706599.3719993}, booktitle = {Proceedings of the Extended Abstracts of the CHI Conference on Human Factors in Computing Systems}, articleno = {525}, numpages = {6}, keywords = {live coding, programming education at scale}, series = {CHI EA '25}, paper = {CHI25LBW_live.pdf}, }

Do It For Me vs. Do It With Me: Investigating User Perceptions of Different Paradigms of Automation in Copilots for Feature-Rich Software
Anjali Khurana, Xiaotian Su, April Yi Wang, and 1 more author
In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems, 2025

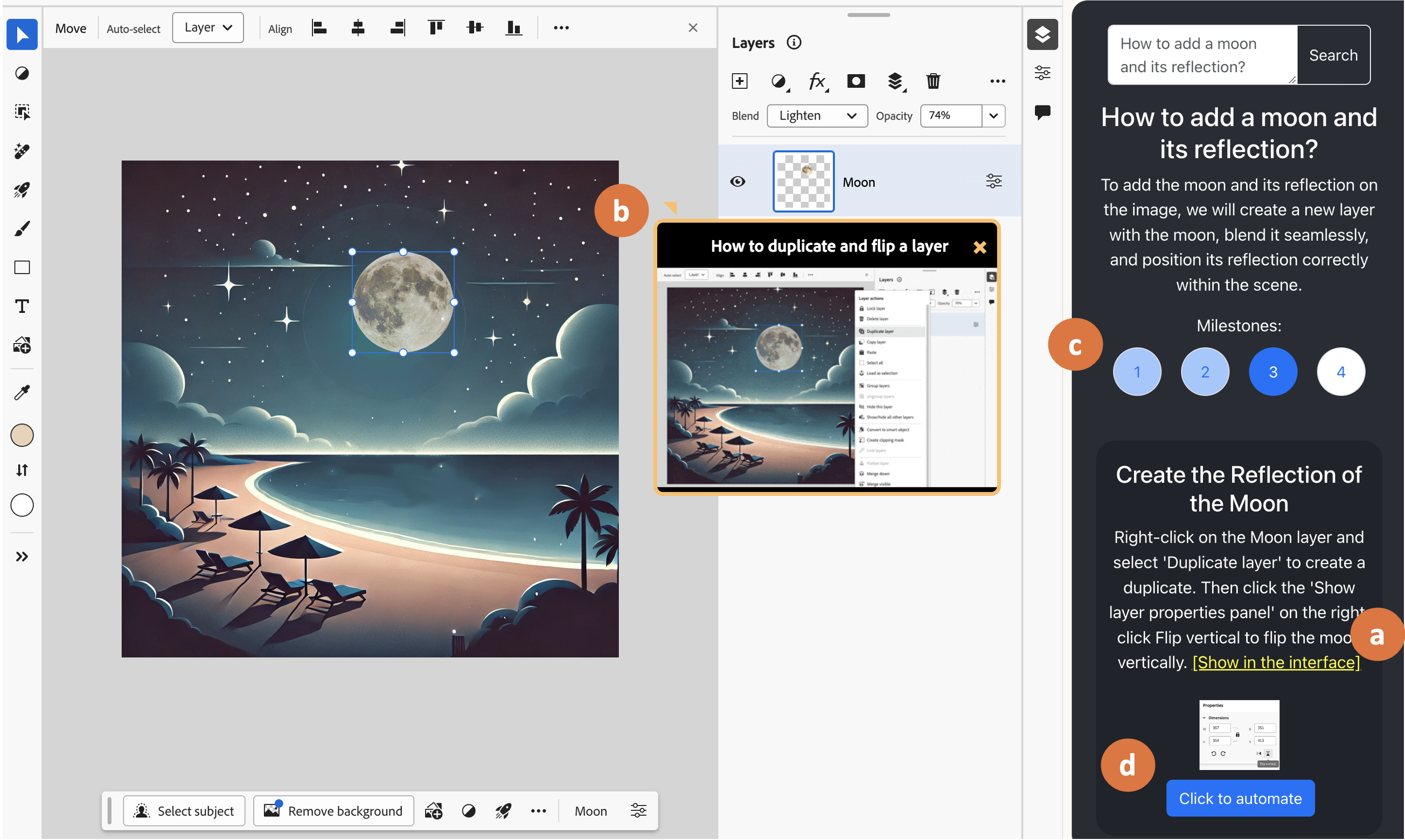

Large Language Model (LLM)-based in-application assistants, or copilots, can automate software tasks, but users often prefer learning by doing, raising questions about the optimal level of automation for an effective user experience. We investigated two automation paradigms by designing and implementing a fully automated copilot (AutoCopilot) and a semi-automated copilot (GuidedCopilot) that automates trivial steps while offering step-by-step visual guidance. In a user study (N=20) across data analysis and visual design tasks, GuidedCopilot outperformed AutoCopilot in user control, software utility, and learnability, especially for exploratory and creative tasks, while AutoCopilot saved time for simpler visual tasks. A follow-up design exploration (N=10) enhanced GuidedCopilot with task- and state-aware features, including in-context preview clips and adaptive instructions. Our findings highlight the critical role of user control and tailored guidance in designing the next generation of copilots that enhance productivity, support diverse skill levels, and foster deeper software engagement.

@inproceedings{Khurana-etal-2025-Investigating, author = {Khurana, Anjali and Su, Xiaotian and Wang, April Yi and Chilana, Parmit K}, title = {Do It For Me vs. Do It With Me: Investigating User Perceptions of Different Paradigms of Automation in Copilots for Feature-Rich Software}, year = {2025}, isbn = {9798400713941}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3706598.3713431}, doi = {10.1145/3706598.3713431}, booktitle = {Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems}, articleno = {880}, numpages = {18}, keywords = {feature-rich software; large language models; software copilots; user control; semi-automation; human-AI collaboration}, series = {CHI '25}, paper = {CHI25_GuidedCopilot.pdf}, }

2024


Closing the Loop: Learning to Generate Writing Feedback via Language Model Simulated Student Revisions
Inderjeet Jayakumar Nair, Jiaye Tan, Xiaotian Su, and 3 more authors
In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing, Nov 2024

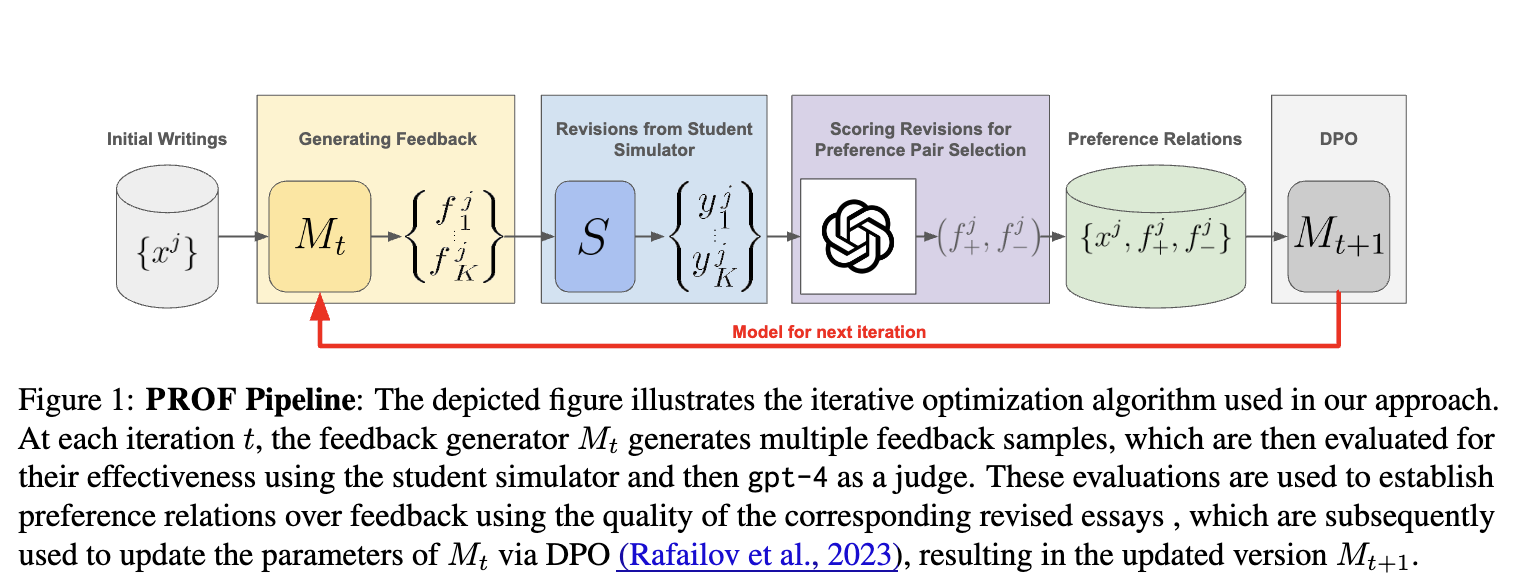

Providing feedback is widely recognized as crucial for refining students’ writing skills. Recent advances in language models (LMs) have made it possible to automatically generate feedback that is actionable and well-aligned with human-specified attributes. However, it remains unclear whether the feedback generated by these models is truly effective in enhancing the quality of student revisions. Moreover, prompting LMs with a precise set of instructions to generate feedback is nontrivial due to the lack of consensus regarding the specific attributes that can lead to improved revising performance. To address these challenges, we propose PROF, which PROduces Feedback by learning from LM-simulated student revisions. PROF aims to iteratively optimize the feedback generator by directly maximizing students’ overall revising performance as simulated by LMs. Focusing on an economic essay assignment, we empirically test the efficacy of PROF and observe that our approach not only surpasses a variety of baseline methods in improving students’ writing but also demonstrates enhanced pedagogical value, even though it was not explicitly trained for this aspect.

@inproceedings{nair-etal-2024-closing, title = {Closing the Loop: Learning to Generate Writing Feedback via Language Model Simulated Student Revisions}, author = {Nair, Inderjeet Jayakumar and Tan, Jiaye and Su, Xiaotian and Gere, Anne and Wang, Xu and Wang, Lu}, editor = {Al-Onaizan, Yaser and Bansal, Mohit and Chen, Yun-Nung}, booktitle = {Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing}, month = nov, year = {2024}, address = {Miami, Florida, USA}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2024.emnlp-main.928}, pages = {16636--16657}, paper = {ACL24_loop.pdf}, }

Enhancing Peer Review with AI-Powered Suggestion Generation Assistance: Investigating the Design Dynamics
Seyed Parsa Neshaei, Roman Rietsche, Xiaotian Su, and 1 more author
In Proceedings of the 29th International Conference on Intelligent User Interfaces, 2024

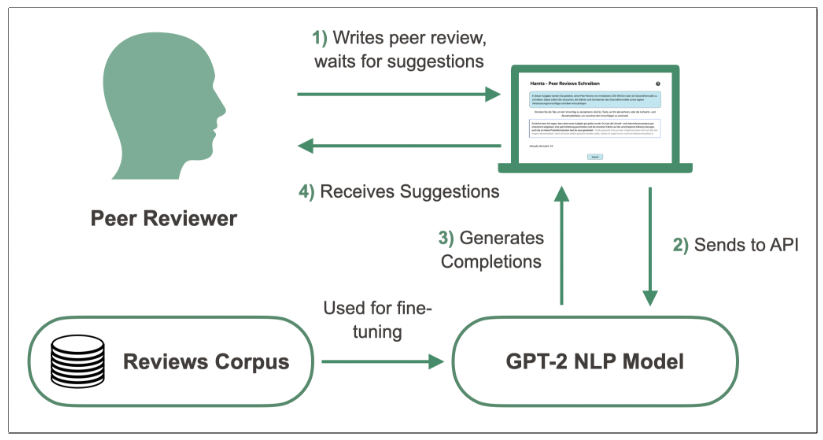

While writing peer reviews is an important task in science, education, and large organizations, providing fruitful suggestions to peers is not straightforward, as different user interaction designs of text suggestion interfaces can have diverse effects on user behavior when writing the review text. Generative language models might be able to support humans in formulating reviews with textual suggestions. Previous systems use two designs for providing text suggestions, inline suggestions and a list of suggestions, but do not empirically evaluate them. To investigate the effects of embedding NLP text generation models in the two designs, we collected user requirements to implement Hamta as an example of an assistant that provides reviewers with text suggestions. Our experiment comparing the two designs with 31 participants indicates that people using the inline interface wrote longer reviews on average, while participants using the list of suggestions found the tool easier to use. The results shed light on important design findings for embedding text generation models in user-centered assistants.

@inproceedings{10.1145/3640543.3645169, author = {Neshaei, Seyed Parsa and Rietsche, Roman and Su, Xiaotian and Wambsganss, Thiemo}, title = {Enhancing Peer Review with AI-Powered Suggestion Generation Assistance: Investigating the Design Dynamics}, year = {2024}, isbn = {9798400705083}, publisher = {Association for Computing Machinery}, address = {New York, NY, USA}, url = {https://doi.org/10.1145/3640543.3645169}, doi = {10.1145/3640543.3645169}, booktitle = {Proceedings of the 29th International Conference on Intelligent User Interfaces}, pages = {88--102}, numpages = {15}, keywords = {generative models, intelligent writing assistants, machine learning, natural language processing, peer reviews, text generation}, location = {Greenville, SC, USA}, series = {IUI '24}, paper = {IUI24_enhancing.pdf}, }

2023


Unraveling Downstream Gender Bias from Large Language Models: A Study on AI Educational Writing Assistance
Xiaotian Su*, Thiemo Wambsganss*, Vinitra Swamy, and 3 more authors
In Findings of the Association for Computational Linguistics: EMNLP 2023, Dec 2023

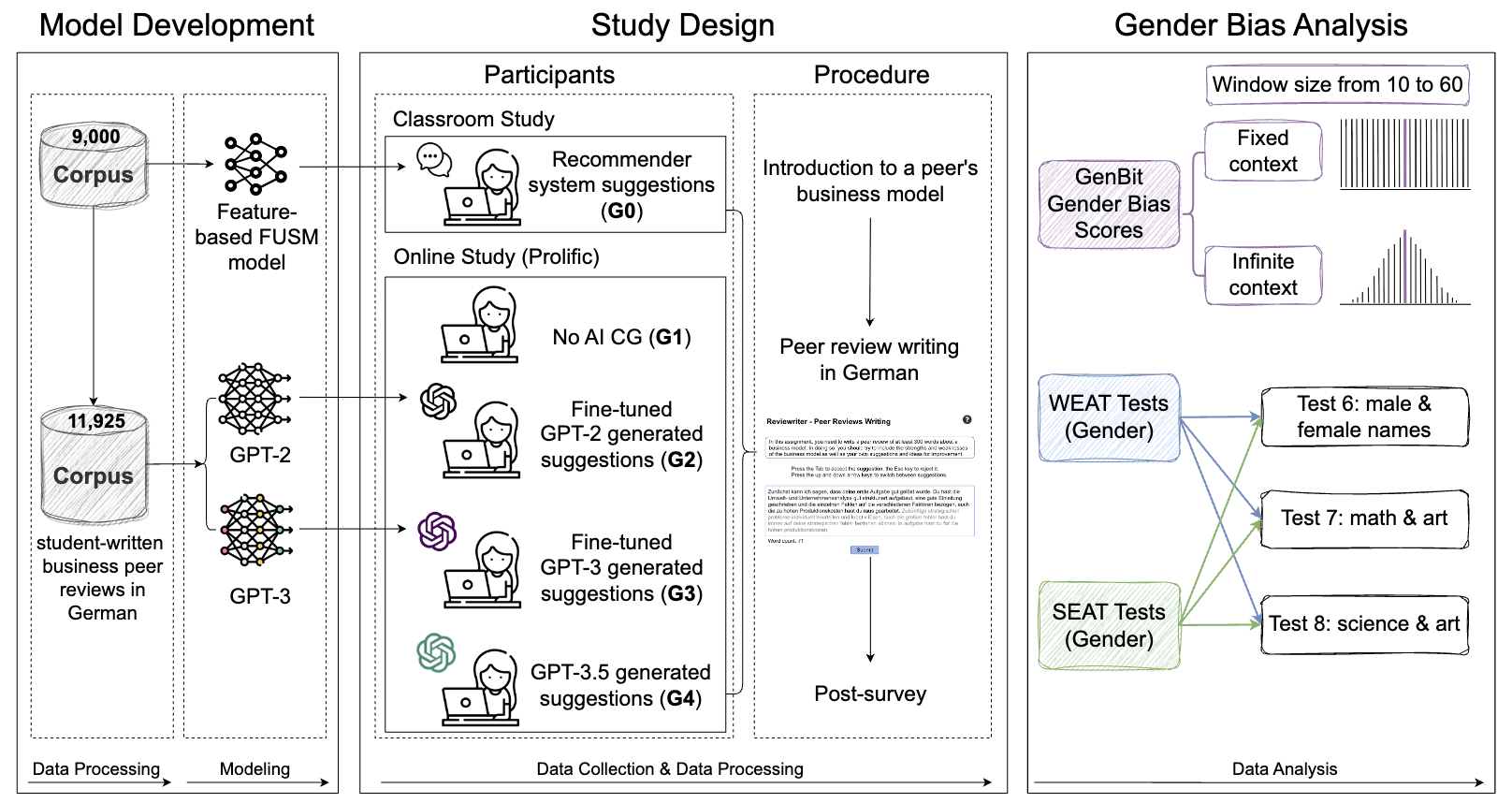

Large Language Models (LLMs) are increasingly utilized in educational tasks such as providing writing suggestions to students. Despite their potential, LLMs are known to harbor inherent biases that may negatively impact learners. Previous studies have investigated bias in models and data representations separately, neglecting the potential impact of LLM bias on human writing. In this paper, we investigate how bias transfers through an AI writing support pipeline. We conduct a large-scale user study with 231 students writing business case peer reviews in German. Students are divided into five groups with different levels of writing support: one in-classroom group with recommender system feature-based suggestions and four groups recruited from Prolific, namely a control group with no assistance, two groups with suggestions from fine-tuned GPT-2 and GPT-3 models, and one group with suggestions from pre-trained GPT-3.5. Using GenBit gender bias analysis and Word Embedding Association Tests (WEAT), we evaluate the gender bias at various stages of the pipeline: in reviews written by students, in suggestions generated by the models, and directly in model embeddings. Our results show no significant difference in gender bias between the resulting peer reviews of groups with and without LLM suggestions. Our research therefore offers an optimistic view of the use of AI writing support in the classroom, showcasing a context where bias in LLMs does not transfer to students’ responses.

@inproceedings{wambsganss-etal-2023-unraveling, title = {Unraveling Downstream Gender Bias from Large Language Models: A Study on {AI} Educational Writing Assistance}, author = {Su*, Xiaotian and Wambsganss*, Thiemo and Swamy, Vinitra and Neshaei, Seyed and Rietsche, Roman and K{\"a}ser, Tanja}, editor = {Bouamor, Houda and Pino, Juan and Bali, Kalika}, booktitle = {Findings of the Association for Computational Linguistics: EMNLP 2023}, month = dec, year = {2023}, address = {Singapore}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2023.findings-emnlp.689}, doi = {10.18653/v1/2023.findings-emnlp.689}, pages = {10275--10288}, paper = {EMNLP23_bias.pdf}, }

Reviewriter: AI-Generated Instructions For Peer Review Writing
Xiaotian Su, Thiemo Wambsganss, Roman Rietsche, and 2 more authors
In Proceedings of the 18th Workshop on Innovative Use of NLP for Building Educational Applications (BEA 2023), Jul 2023

Large Language Models (LLMs) offer novel opportunities for educational applications that have the potential to transform traditional learning for students. Although AI-enhanced applications have the potential to provide personalized learning experiences, more studies are needed on the design of generative AI systems, as is more evidence for using them in real educational settings. In this paper, we design, implement, and evaluate Reviewriter, a novel tool that provides students with AI-generated instructions for writing peer reviews in German. Our study has three key aspects: (a) we provide insights into student needs when writing peer reviews with generative models, which we then use to develop a novel system that provides adaptive instructions; (b) we fine-tune three German language models on a selected corpus of 11,925 student-written peer review texts in German and choose German-GPT2 based on quantitative measures and human evaluation; and (c) we evaluate our tool with fourteen students, revealing positive technology acceptance based on quantitative measures. Additionally, the qualitative feedback presents the benefits and limitations of generative AI in peer review writing.

@inproceedings{su-etal-2023-reviewriter, title = {Reviewriter: {AI}-Generated Instructions For Peer Review Writing}, author = {Su, Xiaotian and Wambsganss, Thiemo and Rietsche, Roman and Neshaei, Seyed Parsa and K{\"a}ser, Tanja}, editor = {Kochmar, Ekaterina and Burstein, Jill and Horbach, Andrea and Laarmann-Quante, Ronja and Madnani, Nitin and Tack, Ana{\"\i}s and Yaneva, Victoria and Yuan, Zheng and Zesch, Torsten}, booktitle = {Proceedings of the 18th Workshop on Innovative Use of NLP for Building Educational Applications (BEA 2023)}, month = jul, year = {2023}, address = {Toronto, Canada}, publisher = {Association for Computational Linguistics}, url = {https://aclanthology.org/2023.bea-1.5}, doi = {10.18653/v1/2023.bea-1.5}, pages = {57--71}, paper = {BEA23_reviewriter.pdf}, }